Overview of PoplarML

PoplarML is a platform for deploying machine learning (ML) models to production. It is built to make moving a trained model into a production environment fast and low-effort, and its simple interface and support for popular ML frameworks make it a useful tool for ML engineers.

How Does PoplarML Work?

PoplarML simplifies deployment with one-click model deployment and real-time inference served through REST API endpoints. Because it is framework agnostic, it works with models built in popular ML frameworks such as TensorFlow, PyTorch, and JAX.
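To make "framework agnostic" concrete, here is one common pattern a serving layer can use. Each model sits behind the same predict signature (a list of JSON-serializable inputs in, one output per input out), so the code that handles requests never needs to know which framework produced the model. This is a generic sketch, not PoplarML's internal design. The `ModelRegistry` class, the `Predictor` signature, and the two toy models are hypothetical stand-ins for real TensorFlow, PyTorch, or JAX models.

```python
from typing import Any, Callable, Dict, List

# Hypothetical uniform signature: a list of JSON-serializable inputs in,
# one JSON-serializable output per input out.
Predictor = Callable[[List[Any]], List[Any]]


class ModelRegistry:
    """Maps a deployment name to a framework-specific predict function.

    Callers only use the uniform Predictor signature, so they never need
    to know which framework produced the underlying model.
    """

    def __init__(self) -> None:
        self._models: Dict[str, Predictor] = {}

    def register(self, name: str, predictor: Predictor) -> None:
        self._models[name] = predictor

    def predict(self, name: str, inputs: List[Any]) -> List[Any]:
        if name not in self._models:
            raise KeyError(f"no model deployed under {name!r}")
        outputs = self._models[name](inputs)
        if len(outputs) != len(inputs):
            raise ValueError("predictor must return one output per input")
        return outputs


# Toy stand-ins for real models. In practice each adapter would convert
# the inputs to a torch.Tensor, tf.Tensor, jax.Array, etc., run the model,
# and convert the results back to plain Python values.
def doubling_model(inputs: List[float]) -> List[float]:
    return [2 * x for x in inputs]


def thresholding_model(inputs: List[float]) -> List[str]:
    return ["high" if x > 0.5 else "low" for x in inputs]


registry = ModelRegistry()
registry.register("doubler", doubling_model)
registry.register("classifier", thresholding_model)
print(registry.predict("doubler", [1.0, 2.5]))     # [2.0, 5.0]
print(registry.predict("classifier", [0.2, 0.9]))  # ['low', 'high']
```

Keeping framework-specific conversion inside each adapter is what lets one request-handling path serve models from different frameworks.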

PoplarML Features & Functionalities

- One-click deployment

- Real-time inference invocation

- Framework agnostic

- User-friendly interface

- Compatibility with popular ML frameworks, including TensorFlow, PyTorch, and JAX

- Robust documentation (coming soon)

Benefits of Using PoplarML

- Accelerated deployment process

- Reduced engineering effort

- Scalable and production-ready ML systems

- Seamless integration with existing frameworks

- Enhanced model accessibility and usability

Use Cases and Applications

- Streamlining ML deployment pipelines

- Accelerating model iteration cycles

- Enabling real-time model inference for various applications

- Facilitating collaboration between data scientists and software engineers

Who is PoplarML For?

- ML engineers

- Data scientists

- Software developers

- Businesses seeking efficient ML deployment solutions

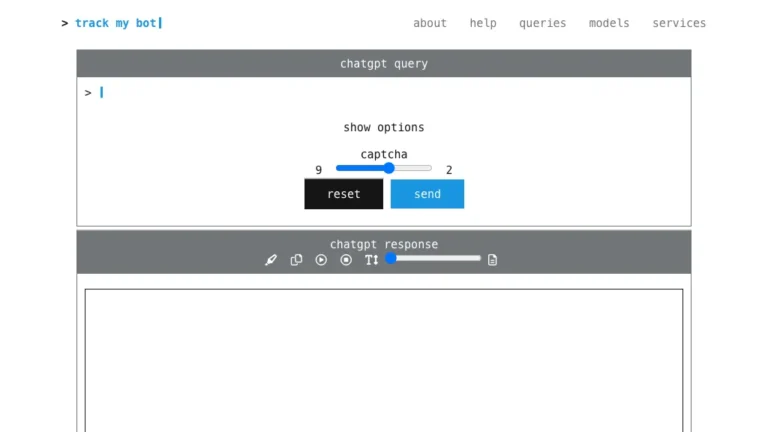

How to Use PoplarML

- Install the PoplarML CLI tool.

- Deploy your model with a single click.

- Access real-time inference via REST API endpoints.
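Once a model is deployed, the last step is an HTTP call to its endpoint. The sketch below uses only Python's standard library to show the general shape of such a call. The endpoint URL, the `Authorization: Bearer` header, and the `{"inputs": ...}` / `{"outputs": ...}` JSON schema are all assumptions for illustration, not taken from PoplarML documentation. Substitute whatever your deployment actually specifies.

```python
import json
import urllib.request
from typing import Any, List

# Hypothetical placeholders: the URL format, header name, and JSON schema
# used below are assumptions, not PoplarML's documented API.
ENDPOINT_URL = "https://example.com/v1/models/my-model/predict"
API_KEY = "YOUR_API_KEY"


def build_inference_request(url: str, api_key: str, inputs: List[Any]) -> urllib.request.Request:
    """Build a JSON POST request for a real-time inference call."""
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
    )


def parse_inference_response(raw: bytes) -> List[Any]:
    """Extract predictions from an assumed {"outputs": [...]} response body."""
    payload = json.loads(raw.decode("utf-8"))
    return payload["outputs"]


def predict(url: str, api_key: str, inputs: List[Any], timeout: float = 10.0) -> List[Any]:
    """Send the request over the network and return the parsed predictions."""
    request = build_inference_request(url, api_key, inputs)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_inference_response(response.read())


if __name__ == "__main__":
    # Build (but do not send) a request to inspect it offline.
    req = build_inference_request(ENDPOINT_URL, API_KEY, [[1.0, 2.0, 3.0]])
    print(req.get_method(), req.full_url)
    # predict(ENDPOINT_URL, API_KEY, [[1.0, 2.0, 3.0]]) would make the live call.
```

Separating request construction and response parsing from the network call makes both easy to check offline before pointing `predict` at a live endpoint.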

FAQs

- Is PoplarML compatible with all ML frameworks?

- PoplarML is framework agnostic; supported frameworks include TensorFlow, PyTorch, and JAX.

- Can I deploy models to production environments easily with PoplarML?

- Absolutely! PoplarML enables one-click deployment for seamless integration into production systems.

- Does PoplarML offer documentation for users?

- Comprehensive documentation for PoplarML is listed as coming soon and is intended to provide detailed guidance for users.

- Can I access model inference in real-time with PoplarML?

- Yes, PoplarML facilitates real-time model inference through REST API endpoints, ensuring swift and efficient predictions.

- Is PoplarML suitable for scalable ML systems?

- Absolutely! PoplarML is designed to deploy production-ready and scalable ML systems with minimal engineering effort.

- What level of engineering expertise is required to use PoplarML?

- PoplarML is designed to be user-friendly, requiring minimal engineering effort for seamless deployment of ML models.

Conclusion

PoplarML aims to make ML deployment fast, scalable, and simple. With one-click deployment and real-time inference through REST API endpoints, it lets ML engineers spend more of their time on models and less on infrastructure.